P*rn Bans Won’t Save Kids—But They Could Kill Internet Freedom

Governments Are Using “Protect the Kids” as a Trojan Horse for Internet Control

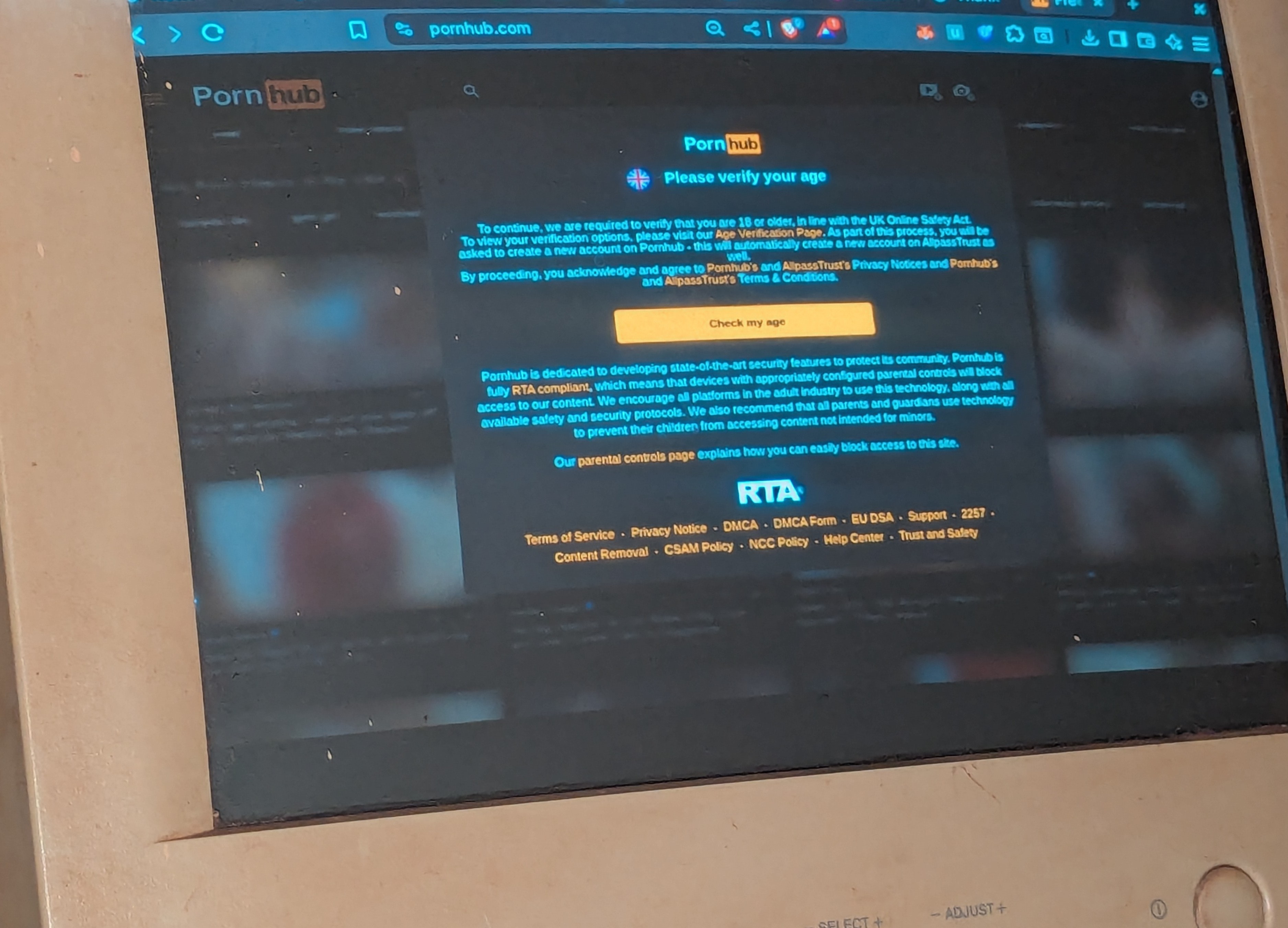

Governments everywhere are cracking down on “gooners” watching explicit content. Sounds noble, right? Protect the kids, save the future.

Wrong.

This isn’t just about kids. This is about control. Once these censorship tools are in place, they won’t stop at porn. They never do. It’s the same playbook every time—start with something everyone agrees is bad, then slowly expand it until you’re deciding what adults can and can’t see.

And here’s the kicker: it won’t even work. Kids will just move to riskier channels. Dark web. Shady forums. AI-generated workarounds. Exactly the places that are dangerous.

These laws are being written by tech-illiterate politicians who couldn’t explain what a VPN is if their re-election depended on it. Instead, they listen to lobbyists whispering, “Just shut it down and the problem goes away.”

News flash: it doesn’t. We’ve seen this before—Silk Road, Pirate Bay, torrents. Every crackdown just pushes things underground and makes them harder to stop.

With AI, the same kids they’re trying to “protect” will be the ones running those underground sites. And this time, they’ll be smarter, faster, and much harder to reach.

We’re not heading toward a safer internet. We’re heading toward a censored one. And in trying to protect the future, they might just destroy it.

The “Protect the Kids” Argument Sounds Noble—Until You Dig Deeper

Politicians frame these measures as moral shields. In practice, they establish identity checkpoints, surveillance hooks, and centralized blocklists. Once the scaffolding exists, adding new categories (extremism, “misinformation,” dissent) is a policy memo, not a technical lift.

Why Bans Push Kids Toward More Dangerous Spaces

Forced friction doesn’t eliminate demand; it reroutes it. Teen curiosity + blocked surface web = migration to:

- Proxy/VPN hopping

- Shady mirror sites packed with malware

- Content trading in encrypted chat channels

- AI-generated explicit content with zero provenance

Risk increases; oversight decreases.

Tech-Illiterate Politicians Are Driving Policy

Legislation drafted without protocol literacy produces:

- Overbroad definitions (collateral damage to legitimate communities)

- Impossible enforcement burdens on small platforms

- Incentives for age/ID tracking (privacy erosion baked in)

- Vendor lock-in to “compliance” scanning suites

History Repeats: From Silk Road to Pirate Bay

Every crackdown follows the same arc:

- Seize or block

- Traffic dips briefly

- Forks, mirrors, decentralization

- Tools harden & spread

- Net resilience improves for everyone—including bad actors

Crackdowns are free R&D for distribution hardening.

The Internet We’re Heading Toward Isn’t Safer—It’s Smaller

Risks that stack up if this trajectory continues:

- Mandatory identity rails for adult content, spilling over into all online commerce

- Normalization of client-side scanning

- AI models tuned to flag “undesirable” categories beyond the original scope

- Reduced innovation as startups can’t afford compliance overhead

What Actually Helps

- Education > prohibition

- Support parents with open-source filtering they control (not centralized state pipes)

- Invest in resilience & digital literacy

- Narrowly scoped, technically verifiable rules (sunset clauses, transparency reports)
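What does “filtering they control” look like in practice? Here is a minimal sketch, assuming a simple domain-level blocklist stored and edited on the household device. The `LocalBlocklist` class and its methods are hypothetical names for illustration, not any real product’s API. It won’t stop a determined teen with a VPN (that’s the whole point of this article), but the list lives with the parent, not in a state pipe, and nothing about who browses what leaves the house.

```python
"""Hypothetical parent-controlled filter: the list is local and editable."""
from dataclasses import dataclass, field


@dataclass
class LocalBlocklist:
    # Domains the parent chose to block; kept on the device, never uploaded.
    blocked: set[str] = field(default_factory=set)

    @staticmethod
    def _normalize(domain: str) -> str:
        # Lowercase and drop a trailing dot so "Example.com." == "example.com".
        return domain.strip().lower().rstrip(".")

    def add(self, domain: str) -> None:
        self.blocked.add(self._normalize(domain))

    def remove(self, domain: str) -> None:
        self.blocked.discard(self._normalize(domain))

    def is_blocked(self, host: str) -> bool:
        # Check the host and each parent domain, so blocking "example.com"
        # also covers "www.example.com" but not "notexample.com".
        labels = self._normalize(host).split(".")
        return any(".".join(labels[i:]) in self.blocked for i in range(len(labels)))


family = LocalBlocklist()
family.add("example-adult.test")
print(family.is_blocked("www.example-adult.test"))  # True
print(family.is_blocked("example.org"))  # False
```

The design choice is deliberate: matching on whole domain labels keeps the rule predictable and auditable by the parent, instead of relying on opaque classifiers run by a third party.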

AI Will Accelerate Evasion

Open-source generative models + prompt engineering = endless morphing supply. Detection pipelines become adversarial games; kids learn faster than regulators iterate.

Call It What It Is

This isn’t child protection. It’s infrastructure for governing attention flows. If you value open ecosystems, pay attention now, before “just this one category” becomes the default rationale for universal content adjudication.